09 Aug 2017

I often get asked how to get trained up on a given Microsoft technology. Like anything, there is a lot of info out there, so here is a consolidated list of where I would start. You can go to each resource and search for the technology you are interested in. I always start at the free tier, then work up from there if I feel I need or want more.

Microsoft Virtual Academy (free)

Good for a full overview of a technology, with both hands-on and presentation-style content.

https://mva.microsoft.com/

Channel9 (free)

Good for learning more about a specific part of a technology. Videos often cover narrower subtopics of a given technology.

https://channel9.msdn.com/

3rd party video content (pay)

More high-quality video training to supplement Channel9 and MVA.

LinkedIn Learning: https://learning.linkedin.com

Lynda.com: https://www.lynda.com

Pluralsight: https://www.pluralsight.com/

Professional programs (pay)

Very structured, role-based professional training that gives you a credential at the end.

Microsoft Academy: https://academy.microsoft.com/en-us/professional-program/

Certification Programs (pay for cert)

If you're looking for certifications, the Microsoft Certification program is a good place to start. The exam prep pages give you a good idea of what each exam covers (and therefore what you should generally know or learn).

https://www.microsoft.com/en-us/learning/certification-overview.aspx

Online/in-person Training with Microsoft Partners (pay)

If you are looking for in-person or online training with instructors you can check out the following resources:

https://www.microsoft.com/en-us/learning/training.aspx

https://www.microsoft.com/en-us/learning/partners.aspx

https://www.microsoft.com/en-us/learning/on-demand-course-partners.aspx

05 Jul 2017

I have started using Bash for Windows as part of my workflow. For a long time, I have been using the standard command prompt with the Git Bash tools added to my path (I used Chocolatey to install them in one command: `choco install git -params '"/GitAndUnixToolsOnPath"'`). This was nice because I could use the shortcut Windows Key + X, then C, to open a command prompt and get access to Linux-like tools such as ls, git and grep.
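If you go the Chocolatey route, a quick way to confirm that the Unix tools actually landed on your path is to look each one up. This is a minimal sketch, assuming a POSIX-compatible shell such as Git Bash or Bash for Windows; `check_tools` is a hypothetical helper name, not part of either tool set.

```shell
#!/bin/sh
# Hypothetical helper: report whether each named tool can be found on PATH.
# Returns 0 only if every tool was found.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# The tools mentioned above.
check_tools ls git grep || echo "some tools are missing from PATH"
```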

Now that I am using Bash for Windows, I wanted to set up a shortcut for it as well. It turned out to be really easy using the Shortcut key setting in the shortcut's Properties window. This process works for any application that has a shortcut. The basic steps are:

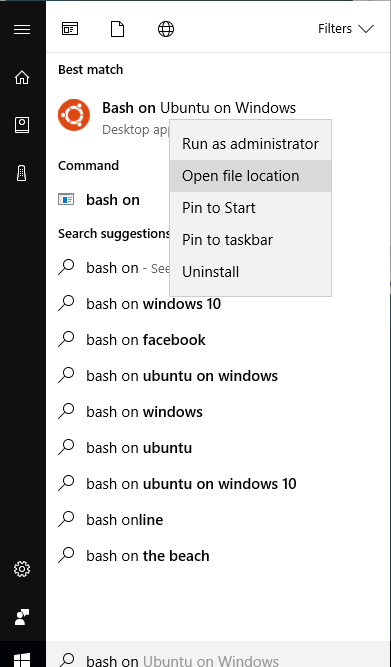

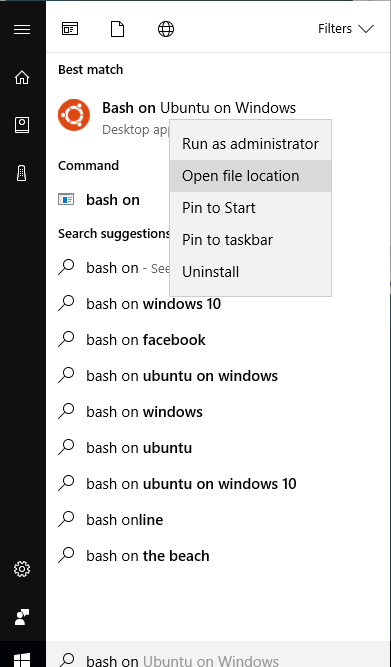

- Find the shortcut by pressing the Windows Key and then typing bash
- Right-click the shortcut and select Open file location

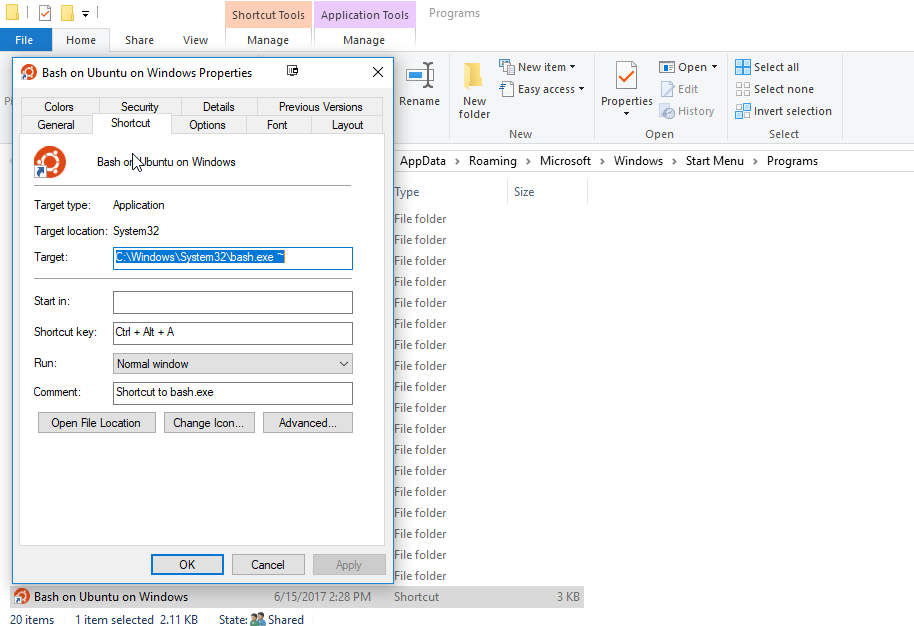

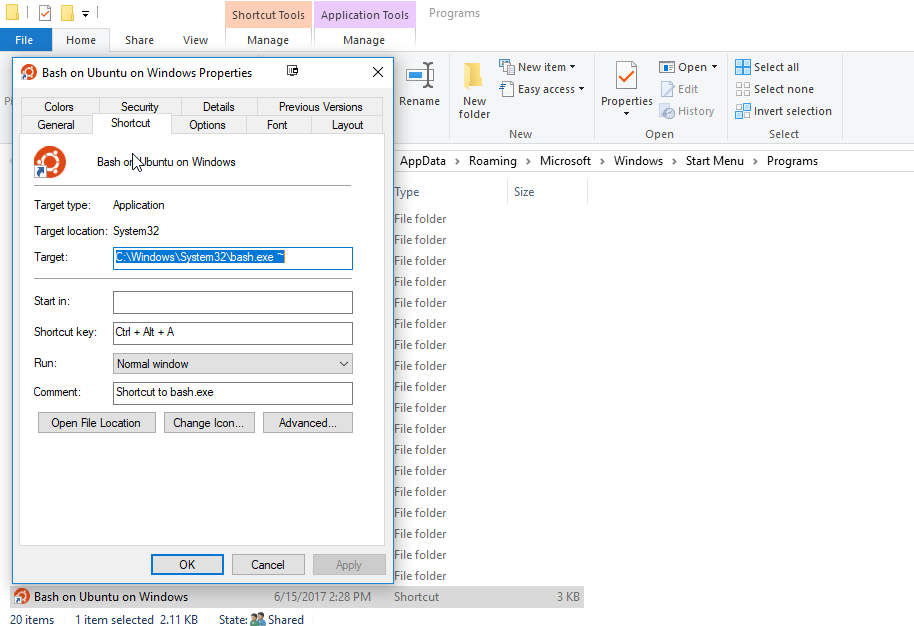

- Right-click the shortcut in the folder and select Properties
- Select Shortcut key and type your keyboard shortcut

06 Jun 2017

In Service Fabric, when your guest executable relies on a configuration file, the file must be put in the same folder as the executable. Examples of applications that need configuration are nginx, or Traefik with a static configuration file.

The folder structure would look like:

```
|-- ApplicationPackageRoot
    |-- GuestService
        |-- Code
            |-- guest.exe
            |-- configuration.yml
        |-- Config
            |-- Settings.xml
        |-- Data
        |-- ServiceManifest.xml
    |-- ApplicationManifest.xml
```
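To try the layout out, a small script can scaffold an empty package with the configuration file sitting next to the executable in the Code folder. This is a minimal sketch, assuming a POSIX shell; `scaffold_guest_package` is a hypothetical helper, and the files it creates are empty placeholders using the names from the tree, not a deployable package.

```shell
#!/bin/sh
# Hypothetical helper: create the Service Fabric guest package layout
# under the given directory. All files are empty placeholders.
scaffold_guest_package() {
    root="$1/ApplicationPackageRoot"
    svc="$root/GuestService"

    mkdir -p "$svc/Code" "$svc/Config" "$svc/Data"

    # The executable and its configuration file must live in the same folder (Code).
    touch "$svc/Code/guest.exe" "$svc/Code/configuration.yml"
    touch "$svc/Config/Settings.xml"
    touch "$svc/ServiceManifest.xml" "$root/ApplicationManifest.xml"
}

# Build the layout in a throwaway directory and list the files it contains.
target=$(mktemp -d)
scaffold_guest_package "$target"
(cd "$target" && find . -type f | sort)
```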

You may need to pass the location of the configuration file via the Arguments tag in the ServiceManifest.xml. Additionally, you need to set the WorkingFolder tag to CodeBase so the process starts in the folder that contains the executable and can find the configuration file by its relative path. The valid options for the WorkingFolder parameter are specified in the docs. An example configuration of the CodePackage section of the ServiceManifest.xml file is:

```xml
<CodePackage Name="Code" Version="1.0.0">
  <EntryPoint>
    <ExeHost>
      <Program>traefik_windows-amd64.exe</Program>
      <Arguments>-c traefik.toml</Arguments>
      <WorkingFolder>CodeBase</WorkingFolder>
      <ConsoleRedirection FileRetentionCount="5" FileMaxSizeInKb="2048"/>
    </ExeHost>
  </EntryPoint>
</CodePackage>
```

Note that the ConsoleRedirection tag redirects stdout and stderr to log files, which is helpful for debugging.

06 May 2017

There are two ways to create Visual Studio Team Services (VSTS) agents: Hosted and Private. Creating your own private agent for VSTS has some advantages, such as being able to install the specific software you need for your builds. Another advantage that becomes important, particularly when you start to build Docker images, is the ability to do incremental builds. Incremental builds let you keep the source files (and, in the case of Docker, the images) on the machine between builds.

If you choose the VSTS Hosted Linux machine, each time you kick off a new build you will have to re-download the Docker images to the agent, because the agent is destroyed after the build is complete. If you use your own private agent, the machine is not destroyed, so the Docker image layers are cached, builds can be very fast, and you minimize your network traffic as well.

Note: This article ended up a bit long. If you are simply looking for how to run VSTS agents using Docker, jump to the end, where there is one command to run.

Installing VSTS agent

VSTS provides you with an agent that is meant to get you started building your own agent quickly. You can download it from your agents page in VSTS. After it is installed, you can customize the software available on the machine. VSTS has done a good job of making this pretty straightforward, but if you read the documentation you can see there are quite a few steps you have to take to get it configured properly.

Since we are using Docker, why not use a Docker container as our agent? It turns out that VSTS provides a Docker image just for this purpose, and it takes only one command to configure and run an agent. You can find the source and the documentation on the Microsoft VSTS Docker Hub account.

There are many different agent images to choose from depending on your scenario (TFS, standard tools, or docker) but the one we are going to use is the image with Docker installed on it.

Using the Docker VSTS Agent

All the way at the bottom of the page there is a description of how to get started with the agent images that support Docker. One thing to note is that it uses the host's instance of Docker to provide the Docker support:

```shell
# note this doesn't work if you are building source with docker
# containers from inside the agent
docker run \
  -e VSTS_ACCOUNT=<name> \
  -e VSTS_TOKEN=<pat> \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -it microsoft/vsts-agent:ubuntu-16.04-docker-1.11.2
```

This works for some things, but if you are using Docker containers to build your source (and you should be considering it; it is even going to be supported soon through multi-stage Docker builds), you may end up with errors like:

```
2017-04-14T01:38:00.2586250Z unit-tests_1 | MSBUILD : error MSB1009: Project file does not exist.
```

or

```
2017-04-14T01:38:03.0135350Z Service 'build-image' failed to build: lstat obj/Docker/publish/: no such file or directory
```

You get these errors because the source code is downloaded into the VSTS agent Docker container. When you start a Docker container from inside the VSTS agent, the new container actually runs on the host machine, because the agent talks to the host's Docker daemon (that's what the line -v /var/run/docker.sock:/var/run/docker.sock does). The host doesn't have your files, so any volume mounts that point at the source folder come up empty. If this hurts your brain, you're in good company.

Agent that supports using Containers to build source

Finally, we come to the part you are most interested in. How do we actually set up one of these agents and avoid the above errors?

There is an environment variable called VSTS_WORK that specifies where the work should be done by the agent. We can change the location of the directory and volume mount it so that when the docker container runs on the host it will have access to the files.
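The rule that makes this work is that the work folder has to be mounted at the identical path inside and outside the agent container, because containers started by the agent resolve paths against the host. A minimal sanity-check sketch, assuming a POSIX shell; `check_work_mount` is a hypothetical helper, not something the VSTS agent image provides.

```shell
#!/bin/sh
# Hypothetical helper: verify that a "-v host:container" volume spec maps the
# agent work folder (VSTS_WORK) to the same path on both sides.
# Usage: check_work_mount <VSTS_WORK> <host:container[:options]>
check_work_mount() {
    work="$1"
    spec="$2"
    case "$spec" in
        *:*) ;;
        *) echo "not a host:container spec: $spec" >&2; return 1 ;;
    esac

    host_path="${spec%%:*}"          # text before the first colon
    rest="${spec#*:}"                # text after the first colon
    container_path="${rest%%:*}"     # drop any trailing ":ro"/":rw" options

    if [ "$host_path" = "$work" ] && [ "$container_path" = "$work" ]; then
        echo "ok: $work is visible at the same path to the host and the agent"
        return 0
    fi
    echo "mismatch: VSTS_WORK=$work but volume maps $host_path -> $container_path" >&2
    return 1
}

check_work_mount /var/vsts /var/vsts:/var/vsts
check_work_mount /var/vsts /home/me/vsts:/var/vsts || echo "containers started by the agent will not find the sources"
```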

To create an agent that is capable of using docker in this way:

```shell
docker run -e VSTS_ACCOUNT=<youraccountname> \
  -e VSTS_TOKEN=<your-account-personal-access-token> \
  -e VSTS_WORK=/var/vsts -v /var/run/docker.sock:/var/run/docker.sock \
  -v /var/vsts:/var/vsts -d \
  microsoft/vsts-agent:ubuntu-16.04-docker-17.03.0-ce-standard
```

The important option here is `-e VSTS_WORK=/var/vsts`, which tells the agent to do all its work in the /var/vsts folder. Volume mounting that folder with `-v /var/vsts:/var/vsts` then enables you to run Docker containers from inside the VSTS agent and still see all the files.

Note: you should change the image tag above to the most recent one or the version you need.
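If you register several agents, it can help to generate the command from one place so the VSTS_WORK value and the volume mount can never drift apart. A minimal sketch, assuming a POSIX shell: `vsts_agent_cmd` is a hypothetical helper that only prints the command (review it, then pipe it to `sh` to run it), and its default image tag is simply the one used above, which you should replace with the version you need.

```shell
#!/bin/sh
# Hypothetical helper: print a `docker run` command for a VSTS agent whose
# work folder is mounted at the same path on the host and in the container.
# Usage: vsts_agent_cmd <account> <pat> [work_dir] [image]
vsts_agent_cmd() {
    account="$1"
    token="$2"
    work="${3:-/var/vsts}"
    image="${4:-microsoft/vsts-agent:ubuntu-16.04-docker-17.03.0-ce-standard}"

    # Every line but the last ends with " \" so the output is a valid multi-line command.
    printf '%s \\\n' "docker run -d" \
        "  -e VSTS_ACCOUNT=$account" \
        "  -e VSTS_TOKEN=$token" \
        "  -e VSTS_WORK=$work" \
        "  -v /var/run/docker.sock:/var/run/docker.sock" \
        "  -v $work:$work"
    printf '  %s\n' "$image"
}

# Review the output first; run it with: vsts_agent_cmd myaccount "$VSTS_PAT" | sh
vsts_agent_cmd myaccount '<your-personal-access-token>'
```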

Conclusion

Using the VSTS Docker Agents enables you to create and register agents easily and with minimal configuration. If you are using Docker containers inside the VSTS Docker Agent to build source files, you will need to add the VSTS_WORK environment variable (and mount that folder at the same path) to get it to build properly.

28 Apr 2017

Docker Swarm introduced Secrets in version 1.13, which enable you to share secrets securely across the cluster, and only with the containers that need access to them. Secrets are encrypted in transit and at rest, which makes them a great way to distribute connection strings, passwords, certs or any other sensitive information.

I will leave it to the official documentation to describe exactly how all this works, but when you give a service access to a secret you essentially give it access to an in-memory file. This means your application needs to know how to read the secret from that file to be able to use it.

This article will walk through the basics of reading that file from an ASP.NET Core application. The basic steps would be the same for ASP.NET 4.6 or any other language.

Note: ASP.NET Core 2.0 (still in preview as of this writing) will have this functionality as a Configuration Provider; you simply add the NuGet package and wire it up.

Create a Secret

We will start by creating a secret on our swarm. Log into a Manager node on your swarm and add a secret by running the following command:

```shell
echo 'yoursupersecretpassword' | docker secret create secret_password -
```
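One thing to watch: `echo` appends a newline, and that newline becomes part of the secret, so code that reads the file verbatim gets the password followed by `\n`. The short check below counts the bytes each command would pipe into `docker secret create`; using `printf '%s'` is one way to send the value without the newline. The commented-out line assumes the same secret name as the command above.

```shell
#!/bin/sh
# Count the bytes each command would send to `docker secret create ... -`.
# `tr -d ' '` removes the padding some wc implementations add.
echo_bytes=$(echo 'yoursupersecretpassword' | wc -c | tr -d ' ')
printf_bytes=$(printf '%s' 'yoursupersecretpassword' | wc -c | tr -d ' ')

echo "echo sends $echo_bytes bytes"      # includes the trailing newline
echo "printf sends $printf_bytes bytes"  # just the 23 characters of the password

# Creating the secret without a trailing newline (run on a manager node):
# printf '%s' 'yoursupersecretpassword' | docker secret create secret_password -
```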

You can confirm that the secret was created by running:

```
$ docker secret ls
ID                          NAME                CREATED             UPDATED
8gv5uzszlgtzk5lkpoi87ehql   secret_password     1 second ago        1 second ago
```

Notice that you will not be able to see the value of the secret in this list. Any containers that you explicitly give access to this secret can read its value as a string from the file at:

/run/secrets/secret_password

Reading Secrets

Your next step is to read the secret inside your ASP.NET Core application. Before Docker Secrets were introduced you had a few options to pass the secrets along to your service:

Modifying your ASP.NET Core application to read Docker Swarm Secrets

During development, you may not want to read the value from a Swarm Secret, for simplicity's sake. To accommodate this, you can check whether a Swarm Secret is available and, if not, read the ASP.NET Core Configuration value instead. This lets you load the value from any of the other configuration providers as a fallback. The code is fairly straightforward:

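```csharp
// At the top of Startup.cs:
using System.IO;
using Microsoft.Extensions.Configuration;   // GetValue<T> lives in the Microsoft.Extensions.Configuration.Binder package
using Microsoft.Extensions.FileProviders;   // PhysicalFileProvider lives in Microsoft.Extensions.FileProviders.Physical

// Reads a Docker Swarm secret if one is mounted, otherwise falls back to ASP.NET Core configuration.
public string GetSecretOrEnvVar(string key)
{
    const string DOCKER_SECRET_PATH = "/run/secrets/";

    if (Directory.Exists(DOCKER_SECRET_PATH))
    {
        using (var provider = new PhysicalFileProvider(DOCKER_SECRET_PATH))
        {
            IFileInfo fileInfo = provider.GetFileInfo(key);
            if (fileInfo.Exists)
            {
                using (var stream = fileInfo.CreateReadStream())
                using (var streamReader = new StreamReader(stream))
                {
                    // Trim the trailing newline that `echo` adds when the secret is created.
                    return streamReader.ReadToEnd().TrimEnd('\r', '\n');
                }
            }
        }
    }

    // No secret mounted: use the other configuration providers (JSON files, environment variables, etc.).
    return Configuration.GetValue<string>(key);
}
```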

This checks whether the secrets directory exists and, if it does, looks for a file named after the given key. If that file exists, the function returns its contents; otherwise it falls back to the value from the ASP.NET Core configuration.

You can use this function any time after the configuration has been loaded from the other providers. You will likely call it somewhere in your Startup.cs, but it could be anywhere you have access to the Configuration object. Note that, depending on your setup, you may want to tweak the function so it does not fall back to the Configuration object. As always, consider your environment and adjust as needed.
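The same approach carries over to other languages; for example, a container entrypoint script could do the equivalent in shell. This is a minimal sketch, assuming a POSIX shell: `get_secret_or_env` is a hypothetical helper that falls back to an environment variable (rather than ASP.NET Core configuration), and `SECRET_DIR` is a hypothetical override that defaults to `/run/secrets` so the function can be tried outside a swarm.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a Docker Swarm secret if it is mounted,
# otherwise fall back to a shell/environment variable with the same name.
# Usage: get_secret_or_env <name>
get_secret_or_env() {
    name="$1"
    # Only allow valid variable names, since the fallback uses eval.
    case "$name" in
        ''|[0-9]*|*[!A-Za-z0-9_]*) echo "invalid name: $name" >&2; return 1 ;;
    esac

    secret_file="${SECRET_DIR:-/run/secrets}/$name"
    if [ -f "$secret_file" ]; then
        # $(...) strips trailing newlines, such as the one `echo` adds.
        value=$(cat "$secret_file")
    else
        # Indirect lookup of the variable named by $name (empty if unset).
        eval "value=\${$name-}"
    fi
    printf '%s\n' "$value"
}

# Example: without a mounted secret, the development value is used.
secret_password=dev-only-password
DB_PASSWORD=$(get_secret_or_env secret_password)
echo "password length: ${#DB_PASSWORD}"
```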

Create a service that has access to the Secret

The final step is to create a service in the Swarm that has access to the value:

```shell
docker service create --name="aspnetcore" --secret="secret_password" myaspnetcoreapp
```

or in your Compose file (version 3.1 and higher; only the relevant parts are shown):

```yaml
version: '3.1'
services:
  aspnetcoreapp:
    image: myaspnetcoreapp
    secrets:
      - secret_password
secrets:
  secret_password:
    external: true
```

Creating an ASP.NET Core Configuration Provider

I am going to leave it to the reader to create an ASP.NET Core Configuration Provider (or wait until it is a NuGet package supplied with ASP.NET Core 2.0). If you decide to turn the code into a configuration provider (or use ASP.NET Core 2.0's), you could simply use it in your Startup.cs, and it might look something like:

```csharp
public Startup(IHostingEnvironment env)
{
    var builder = new ConfigurationBuilder()
        .SetBasePath(env.ContentRootPath)
        .AddJsonFile("appsettings.json", optional: false, reloadOnChange: true)
        .AddJsonFile($"appsettings.{env.EnvironmentName}.json", optional: true)
        .AddEnvironmentVariables()
        // Placeholder for a Docker secrets configuration provider (your own, or
        // the one planned for ASP.NET Core 2.0); the actual method name may differ.
        .AddDockerSecrets();

    Configuration = builder.Build();
}
```

IMPORTANT: this is just an example; the actual implementation might look slightly different.

Conclusion

Docker Secrets are a good way to keep your sensitive information available only to the services that need access to it. As you can see, you can modify your application code in a fairly straightforward way that remains backward compatible. You can learn more about managing secrets in Docker's documentation.